Kelly’s Kommentary on the 2023 Verizon DBIRRRRRRR

I enjoy the simple pleasures in life: a fragrant bouquet of peonies; crisp, caramelized plantains; my fluffy cat purring in my lap; and receiving an early copy of this year’s Verizon Data Breach Investigations Report (DBIR) to pore over and let my hot takes simmer in the early summer sunlight.

While this year’s report lacks any graphs that remind me of “butterfly vomit” or “a parrot on miscellaneous bath salts”, I still found myself enjoying it. The “spaghetti charts” are not your simple curved line graph but instead are VIBING, which both my aesthetic and economic souls appreciate (the latter because the vibes reflect confidence intervals). The footnotes are also a treat and keep what otherwise might be an oppressively dry report feeling like a breeze to read. Verizon DBIR? More like Verizon DBIRRRRRRRRRRRR.

But the point of the report is the data within, and while not much changes year over year, a few things stood out to me. This post shares my notable thoughts, questions, and (spicy) commentaries on the 2023 DBIR.

Cash Rules Everything Around Us

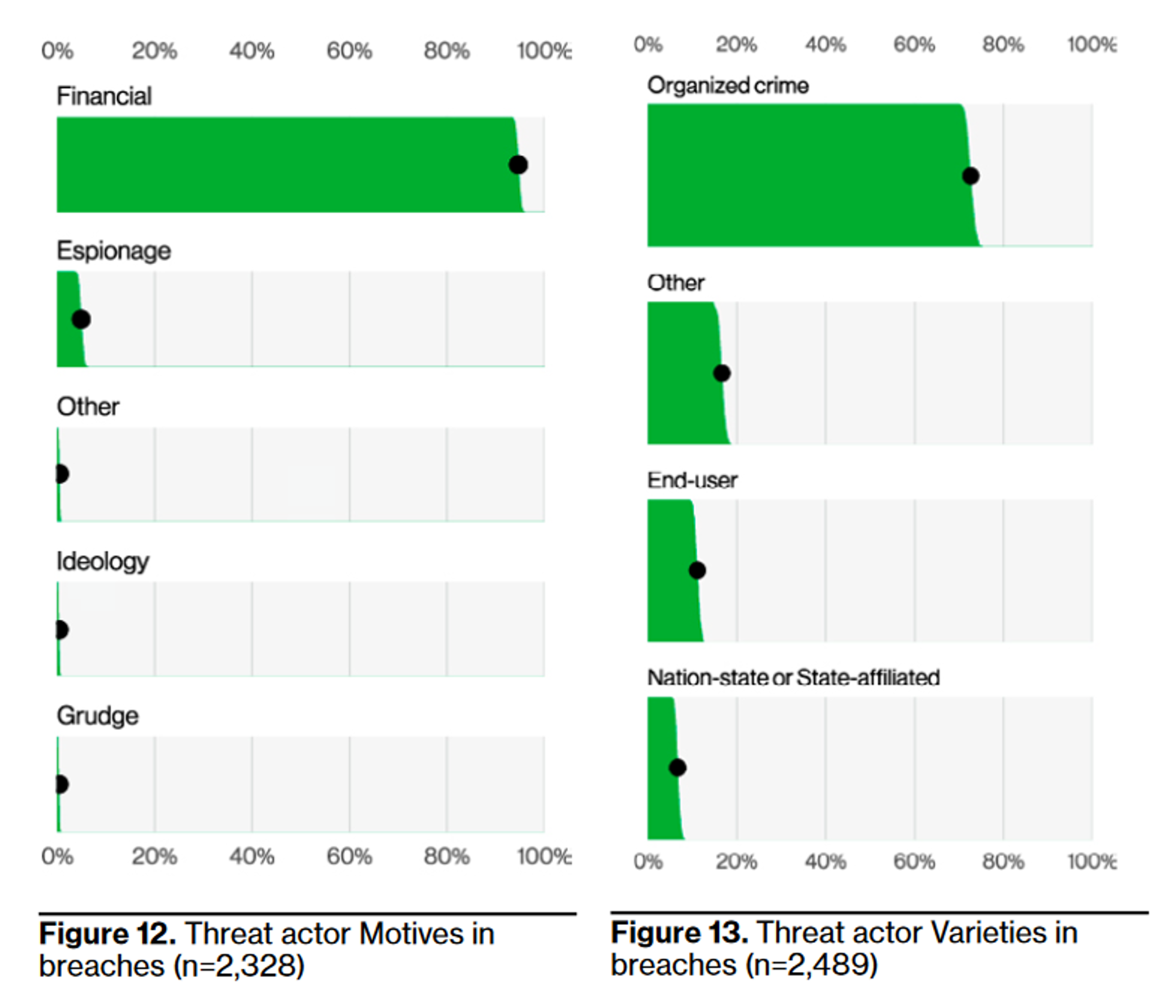

Yet again, the data shows 94.6% of breaches are financially driven. Our oft-aggrandized advanced persistent threats (APTs) – the nation states who largely compromise for espionage purposes – are simply not the bulk of activity. Indeed, organized crime is 75% of all “threat actors” in breaches, followed by “Other” and “End-user” (the latter also including mistakes, not just purposefully malicious activity – a lumping together I personally dislike) with nation-state dead last.

It’s a reminder that when we invest effort in security, we should focus on the potential paths that require attackers to invest the least; if they’re overwhelmingly motivated by money, then they will be sensitive to return on investment (ROI)1. But we should also think about what costs us the most money – not just in terms of direct costs from the compromise (like money wired away or incident response retainer fees) but also the costs of investing in mitigations for it – and opportunity costs, too, like hurting productivity due to security mitigations.

And it’s a reminder that the best way to hurt attackers, whether at local or macro scales, is to poison their ROI. One of my hobbies is brainstorming ways we can aggrieve attackers and force them to waste time and money, but, fun aside, there is wisdom in the idea that applying an economic lens to security benefits us.
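For the econ-curious, here’s one back-of-the-envelope way to write “poisoning their ROI” down. It’s a simplification, not a model the DBIR uses: treat an attack path as an investment with cost $C$, probability of success $p$, and payoff $V$ when it works.

$$
\mathrm{ROI} = \frac{pV - C}{C}
$$

Every defensive lever maps onto one of those terms: make compromise less likely (lower $p$), shrink what a successful compromise yields (lower $V$), or make each attempt burn more time and money (raise $C$).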

Double Double Pretexting and Trouble

I’ll admit I rarely think about pretexting and so when I read it in the report this year, my first thought was that it must be something like the new “first base” for the chronically online – but my confusion grew when the report said it’s commonly used in “bacon egg and cheese” sandwiches, which is what any New Yorker knows “BEC” means.

But no, pretexting is when an attacker crafts a compelling scenario to trick victims into doing something for them – like changing bank account details or sending money via a wire transfer (and BEC stands for “business email compromise,” which is much less delicious). Phishing will have something like an attachment or link the attacker wants you to click, while pretexting is more like the Nigerian Prince scam but without the purposefully implausible elements.

What I found interesting is that attackers are using email access to insert themselves into existing email threads to ask the victim to perform some sort of task (like updating bank details that mean money will route to the attacker). I spend a lot of time wishing I were excluded from email threads, so in some sense I respect the hustle and grind by attackers here.

Like, let’s take a step back. Attackers are basically simulating the most tedious, soul-sucking aspect of corporate life for profit and I’m pretty sure they’re still making less per capita than the people who do that for their day jobs – possibly expending more effort, too. Is the prevalence of “bullshit jobs” what they’re really exploiting? I just can’t imagine willingly joining the mundane, lifeless coordination dance millions of workers perform daily, because no amount of crimes makes that sexy.

It also doesn’t feel particularly scalable? From what I understand, purchasing email creds from the deep, dark web2 is not expensive – but surely accessing the inbox, finding appropriate email threads for inserting your request, typing a context-appropriate email, etc. involves quite a bit of effort?

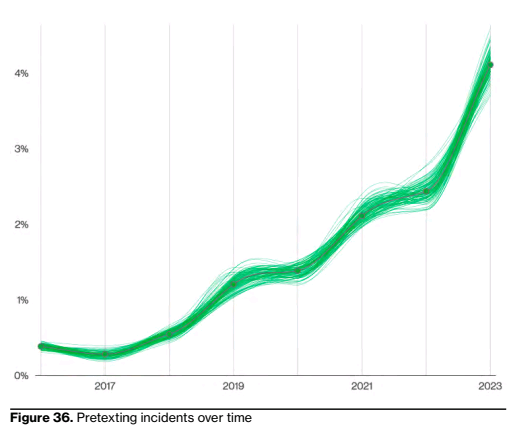

Yet, it starts to make sense when we look at the payout. The median transaction size for a BEC incident is now $50k (up from ~$30k in 2020), which, as we’ll see in the next section on ransomware, is 5x the median ransomware transaction size. Is the effort and resource expenditure involved in pretexting -> BEC vs. ransomware 5x as much? Maybe? Criminals leverage automation to great effect in ransomware operations, after all.
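To put some structure on that “5x” question, here’s a toy ROI comparison. Big caveats: the per-incident attacker costs in the example are made up (the DBIR doesn’t report them), and the ransomware median is back-computed from the 5x figure rather than quoted. Under this simple model, BEC wins whenever it costs attackers less than 5x what a ransomware incident does.

```python
# Median payouts from the post; the ransomware figure is implied by the
# "5x" comparison, not quoted directly from the DBIR.
BEC_MEDIAN = 50_000
RANSOMWARE_MEDIAN = BEC_MEDIAN / 5  # 10,000


def roi(payout: float, cost: float) -> float:
    """Net profit per dollar the attacker spent on one incident."""
    return (payout - cost) / cost


def bec_beats_ransomware(bec_cost: float, ransomware_cost: float) -> bool:
    """True if BEC's ROI beats ransomware's, given hypothetical per-incident costs.

    For positive costs this reduces to: bec_cost < 5 * ransomware_cost.
    """
    return roi(BEC_MEDIAN, bec_cost) > roi(RANSOMWARE_MEDIAN, ransomware_cost)


if __name__ == "__main__":
    # Hypothetical: a ransomware incident costs the attacker $1,000.
    print(bec_beats_ransomware(4_000, 1_000))  # True: 4k is under the 5k break-even
    print(bec_beats_ransomware(6_000, 1_000))  # False: 6k is over it
```

So “is BEC 5x the effort?” is exactly the right question: that’s the break-even point.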

I don’t like making bets, so I’m eager to see what the data indicates next year; if pretexting/BEC continues to rise, and ransomware stagnates or falls, then it suggests criminals pivoting to the monetization path with higher ROI.

Ransomware at the Plateau of Productivity?

Speaking of ransomware, one shocker in the report is that ransomware didn’t grow year over year – staying at 24% of breaches – despite what crowing headlines would have us believe. Does this suggest attackers have perhaps hit a “plateau of productivity” with it? Or are there simply higher-ROI options (like pretexting) that are dampening its adoption and expansion by criminal organizations?

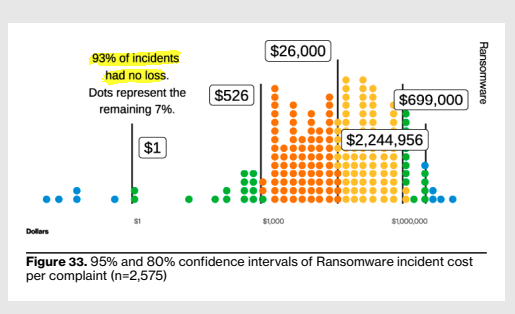

What may shock many is that 93% of ransomware incidents had no loss (which is a little higher than last year but I know it surprised many people last year, too). We constantly hear that ransomware could be a company-ending compromise; it’s already a myth that tons of businesses (especially small ones) go out of business due to breaches and, based on this data, the financial loss from ransomware is unlikely to lead to bankruptcy for the vast majority of organizations.

The 95% range of ransomware losses is now $1.00 (you can’t even get a slice of pizza in NYC for that anymore) to $2.25 million. At that extreme end of the scale, the smattering of tools to protect against it are well worth the cost (assuming the companies who faced losses that high weren’t already using those security tools, which is not revealed by this data but I would very much like to know). The median loss, however, is $26,000. For a year’s worth of EDR subscription, $26,000 covers something like 350 endpoints (assuming zero additional costs beyond the sticker price)… and that doesn’t even help you with recovery like backups…
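The arithmetic behind that comparison, for anyone who wants to plug in their own vendor quote. The only inputs from the post are the $26,000 median loss and the rough 350-endpoint estimate; the roughly $74 per endpoint per year it implies is just what that estimate works out to, not a quoted price, and the $100 price in the example is made up.

```python
MEDIAN_RANSOMWARE_LOSS = 26_000  # USD, median ransomware loss cited in the post
ROUGH_ENDPOINTS = 350            # the post's rough one-year EDR coverage estimate


def implied_price_per_endpoint(budget: float, endpoints: int) -> float:
    """Annual per-endpoint price implied by covering `endpoints` with `budget`."""
    return budget / endpoints


def endpoints_affordable(budget: float, price_per_endpoint: float) -> int:
    """Whole endpoints a budget covers for one year at a given per-endpoint price."""
    return int(budget // price_per_endpoint)


if __name__ == "__main__":
    price = implied_price_per_endpoint(MEDIAN_RANSOMWARE_LOSS, ROUGH_ENDPOINTS)
    print(f"${price:.2f} per endpoint per year")  # $74.29 per endpoint per year
    # Hypothetical quote of $100/endpoint/year:
    print(endpoints_affordable(MEDIAN_RANSOMWARE_LOSS, 100))  # 260
```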

What’s especially intriguing is that the median ransomware payout is decreasing while the median loss is increasing. Is it, as the report suggests, because ransomware campaigns are going after smaller companies? In truth, I’ve heard the opposite – that criminals are targeting larger organizations in hope of a bigger median payout. The report also suggests the higher loss amounts might be due to the recovery costs, which feels more plausible. I’m open to clues as well as alternate theories about this one.

Mandatory Log4Shell Mention

Attackers, perhaps unsurprisingly, tried to capitalize on companies not patching Log4j, with 32% of all scanning during the year conducted within 30 days of its release. Yet, overall, Log4Shell didn’t have the breach impact we might expect: it was mentioned in 0.4% of the incidents in their data set (“just under a hundred cases”). I think we can interpret this as a victory for defense, especially thanks to the tireless efforts of SREs and security engineers to patch and otherwise protect against it.

One thing that does fascinate me is that 73% of the Log4j cases in the DBIR’s data set involved Espionage, with 26% involving Organized Crime. To me this suggests one of two things (or both): first, that both nation states and criminal organizations tried to leverage Log4j but the nation states were more successful in achieving compromise; or, that nation states seized the opportunity to blend in with criminal activity so successfully that they actually dominated the activity (which could make sense given they were faster to operationalize exploits for it).

Side rant about SBOMs

There’s SBOM propaganda at the end of the Log4j section, which is sad because there are better recommendations they could have made, and it feels like pandering to one of their key data providers rather than a thoughtful analysis of what might help readers the most. SBOMs may tell you where the software exists (if you can wrangle the deluge of JSON they entail), but they don’t help you take action. You could easily have SBOMs and still not patch critical vulns for 49 days (the median time they cite in the report).
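To make the “tells you where, not what to do” point concrete, here’s a minimal sketch of the “where” half, assuming a CycloneDX-style JSON SBOM (a `components` array whose entries have `name` and `version` and may nest further `components`). The example document, its component names, and the `find_component` helper are hypothetical, written for illustration:

```python
import json
from typing import Iterator, List, Tuple

# A tiny, hypothetical CycloneDX-style SBOM. Real ones are far larger
# (the "deluge of JSON"); the wrapper and client names here are made up.
EXAMPLE_SBOM = json.dumps({
    "bomFormat": "CycloneDX",
    "specVersion": "1.4",
    "components": [
        {
            "type": "library",
            "name": "hypothetical-logging-wrapper",
            "version": "1.0.0",
            "components": [
                {
                    "type": "library",
                    "group": "org.apache.logging.log4j",
                    "name": "log4j-core",
                    "version": "2.14.1",
                }
            ],
        },
        {"type": "library", "name": "hypothetical-http-client", "version": "3.2.0"},
    ],
})


def iter_components(components: List[dict]) -> Iterator[dict]:
    """Walk components depth-first, including ones nested under a parent."""
    for comp in components:
        yield comp
        yield from iter_components(comp.get("components", []))


def find_component(sbom_json: str, name: str) -> List[Tuple[str, str]]:
    """Return (name, version) for every component whose name matches exactly."""
    sbom = json.loads(sbom_json)
    return [
        (comp["name"], comp.get("version", "unknown"))
        for comp in iter_components(sbom.get("components", []))
        if comp.get("name") == name
    ]


if __name__ == "__main__":
    print(find_component(EXAMPLE_SBOM, "log4j-core"))  # [('log4j-core', '2.14.1')]
```

Notice what it doesn’t do: nothing here tests a patched version, rolls it out, or gets anyone to approve the change. That’s the “okay, now what?” part, and it lives in your change process, not your SBOM.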

The problem, from what I’ve seen, is very rarely awareness of a vulnerability; the issue is that patching is a confusing, manual process, or that teams worry about patches breaking things in prod or, relatedly, lack sufficient testing to feel confident deploying the patch. Yes, in the Log4j scenario, some security teams scrambled to figure out “where is log4j in our stack???” but I also know of multiple software engineering teams who gave their security teams that info posthaste and yet it still didn’t get patched quickly (sadly, often due to sociopolitical reasons). I agree SBOMs may offer an asset management value prop, but we must always be careful to ask “okay, now what?” when we think about what value data might grant us.

SBOMs do not answer “okay, now what?” Repeatable change processes, including testing, answer that. Speedy, automated CI/CD answers that. I’ve discussed this at length elsewhere and I’m tired of standing on my soapbox, so let’s move on.

Don’t Roll Your Own Mail Server

Another thing I found surprising is that 41% of breaches involve mail servers (not just the sending or receiving of email). Why are you still running your own mail server? It’s not only a pain in the ass but a recipe for deliverability problems, too, aside from the security issues that arise. We often hear security is the enemy of convenience but rolling your own mail server is both inconvenient and insecure. I do not get it.

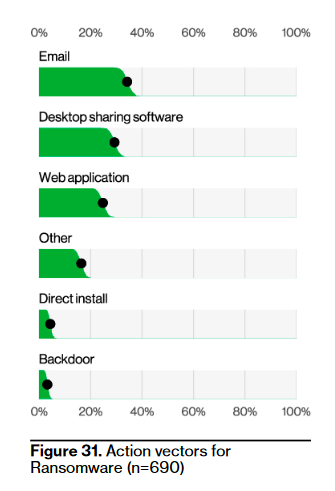

Desktop Sharing isn’t Caring

How many organizations still use desktop sharing software? The answer seems to be a lot given its prominence in these breach statistics… but does any employee even like desktop sharing software?

I have to call out Microsoft here. Android, ChromeOS, iOS, and macOS all require user permission for desktop screen capture or control – and display a warning dialog – but every running Windows application can snoop on the screen content, log keyboard presses, and inject user input without any sort of opt-in.

Alas, those same features allow organizational leadership to surveil their employees’ activity, and Microsoft can make money by supplying those capabilities. Even if requiring consent via a warning dialog would make a dent in this widespread attack problem, I’m doubtful they’ll remove the snoopability because then they’d sacrifice potential revenue. Perverse incentives like this make me want to flee to a cottage in a woodland grove with my cats and forsake snarky infosec commentary for a simple life of writing philosophical novels.

Delays on the Supply Chain Train

We’re inundated with FUD about the software supply chain and all the horrors skulking within it, but the data in this year’s DBIR suggests we might be investing too much of our feels in this problem. Based on this data, the most common “threat” from using third-party software is that the vendor’s dev or admin credentials are compromised and the attacker then asks you to send money to the wrong place – and that would only apply to commercial vendors, not open source ones (although I’m sure open source contributors would love you to randomly send them money, they usually don’t ask for it).

Sure, there’s the “exploit vuln” category but that can include vulnerabilities in first-party and third-party code, so it’s difficult to tease apart the impact of “supply chain” specifically. And, regardless, “exploit vuln” comes well after use of stolen creds, “other”, ransomware, phishing, and pretexting. It’s about on par with “misdelivery” and, in fact, dropped from 7% last year to 5% this year.

If we think about the upstream attacks that especially spook us, where an attacker gains access to the supplier’s source code and pushes a malicious update, the data suggests their prevalence is minuscule – so much so that the DBIR excluded “Partner and Software update” as an action vector this year and even lumps backdoors and C2 together into a single “Backdoor / C2” category.

My hot take is that a lot of this comes down to two things: how the human brain works, and ego. Humans prefer the world to be a straightforward, orderly place. Throw rock up, rock come down. We invented religions, in part, to cope with the complexities of the world, like: “I planted the seeds in the ground but we haven’t gotten rain, therefore the gods are angry and we should offer sacrifices to them” or the more basic “[insert deity here] has a plan.”

We really do not like acknowledging that stochasticity slithers throughout all things, and we recognize, quite correctly, that the more things there are interacting in a system, the more likely the outcomes will baffle us. “Attacker sends email with link, human clicks on link, malware downloaded” is actually a pretty linear story involving few components.

But when we look at the sprawling graph that is our software ecosystem, we have no chance of comprehending it in full – and that complexity terrifies us. We don’t feel in control. Even if most of the time things go right (which is the case with software) and that complexity helps us achieve otherwise impossible outcomes, our lizard brains are still like “MANY BIG SCARE!” because we can’t comprehend it naturally in our brains. The emergent interactions shock us, unlike when a user clicks on a link or even wires money to the wrong bank account.

That’s the “how human brains work” part of my explanation for the furor over supply chain security of late. But there’s often a tinge of moral outrage in the supply chain discourse – that vendors “know better” and are “negligent” and other denigrations. This is where ego comes in. My hot take is that certain entities felt extremely embarrassed about the SolarWinds incident and, because they can’t invent a time machine and go back to the 80s and insist that software should continue to be built primarily in-house3, they decided to shame the private sector instead.

Let’s be real, software quality usually dies in any incumbent vendor required by compliance and regulations. I find it hilarious that in the same breath as “how dare you” they also recommend vendors that offer “zero trust” things (usually in name only), as if those also won’t make for delicious targets for nation state adversaries. By far the best targets for attackers from a software supply chain perspective are the tools required to meet regulatory requirements. There is very little incentive to invest in quality or innovation when you sell into a sticky budget line item.

But you can’t exactly shame the incentive paradigms begotten by compliance requirements4, so here we are. And from a private sector perspective, it’s really convenient for security leaders to claim that the real problem is this software spaghetti monster of societal proportions. No Board of Directors can expect you to tackle that problem, right? So, yet again in the infosec industry, we have this admittedly elegant symbiosis where we all avoid accountability and shout a lot and pretend like we’re making progress – and, even better, we get to gain a sense of self-righteous superiority in the meantime.

To be clear, the Verizon DBIR says none of this and if they ever did I’d expect it to be their last report given how much of their data comes from various government entities and incumbent security vendors. I say it because I still don’t know how to sell out (please help me).

Conclusion

This year’s Verizon DBIR is worth the read to challenge your assumptions and ponder the data. To any vendors reading, please consider contributing to the data set (and no, Verizon isn’t paying me to say this). Realistically, this is the best aggregate of breach data we have in the private sector, but, as the report notes a few times, there is sampling bias due to their sources. The greater the diversity of sources, the clearer the story we can craft of what is actually happening in attack land vs. the fan fiction we normally read about and pretend helps us.

Enjoy this post? You might like my book, Security Chaos Engineering: Sustaining Resilience in Software and Systems, available at Amazon, Bookshop, and other major retailers online.

1. Nation-states are also sensitive to ROI, but their payoff is often less quantifiable than money is. How do you assign value to classified documents about upcoming trade negotiations? Actually, this is a challenge I’d very much enjoy tackling in another life, but it’s safe to say that quantifying it is harder than quantifying money.

2. After playing Tears of the Kingdom for a bit, I now imagine the deep, dark web to be a chasm filled with Ganondorf’s gloom.

3. Even Microsoft, the formerly big bad anti-OSS bogeycompany, builds much of its stack on OSS now.

4. I mean, I have shamed and do shame incentive paradigms – and I find it fulfilling af – but I’ve long understood I am a stranger in a strange land.