The SUX Rule for Safer Code

This is kind of a dumb post but it amuses me and maybe will help some people somewhere. It proposes a rename of Chromium’s brilliant “Rule of Two,” which I love because it is a very handy heuristic for software engineers but also hate because it co-opts a term from Star Wars lore that is not even close to related. Instead, I think it should be called “The SUX Rule.”

Let me explain.

Rule of Two: Sith Edition

Let’s start with the Star Wars version of Rule of Two because I am a lore nerd (a lored, if you will) and while Star Wars lore isn’t as glorious as Elder Scrolls lore,[1] it’s satisfying enough for me to defend.

The Rule of Two was a decree established by Darth Bane as part of the persistent Sith plot to exact revenge upon the Jedi Order. It states that only two Sith Lords may exist at any given time – maximizing secrecy – consisting of a “master” and “apprentice”; the apprentice was generally expected to murder their master after gaining enough experience.[2]

The most famous example of the Rule of Two is Darth Sidious – aka Senator Palpatine[3] – as master and Darth Vader as apprentice. It is taking all my self-control not to unleash more lore, so let’s continue.

Rule of Two: Chromium Edition

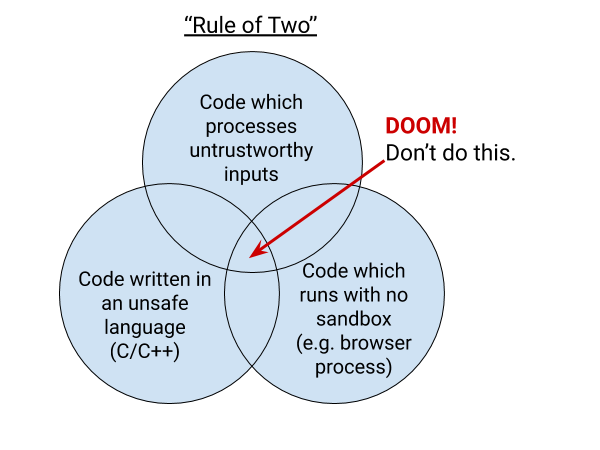

Now, Chromium’s Rule of Two does not involve writing two code modules, one of which will eventually kill the other after running in production long enough. Chromium’s Rule of Two states that we should never write high privilege components in an unsafe implementation language if they process untrustworthy inputs.

It is an excellent rule, and as their diagram visualizes, when code has all three problematic characteristics – runs without a sandbox; written in an unsafe language; processes untrustworthy inputs – it can spell doom.

However, I am also a bit of a language nerd and definitely a behavioral nerd, and I’m dissatisfied with Chromium’s Rule of Two on these petty grounds. The “Rule of Two” isn’t very memorable when it isn’t about Sith and treachery. We want developers to think of this rule when designing systems, so it needs to be salient.

And I personally find terms like “untrustworthy inputs” too squishy; it can imply that we’ve done some sort of analysis to judge inputs as untrustworthy. The Chromium team is very smart and carefully defined what they mean by it in their post but human recall is flawed, especially when it comes to nuance.

The SUX Rule

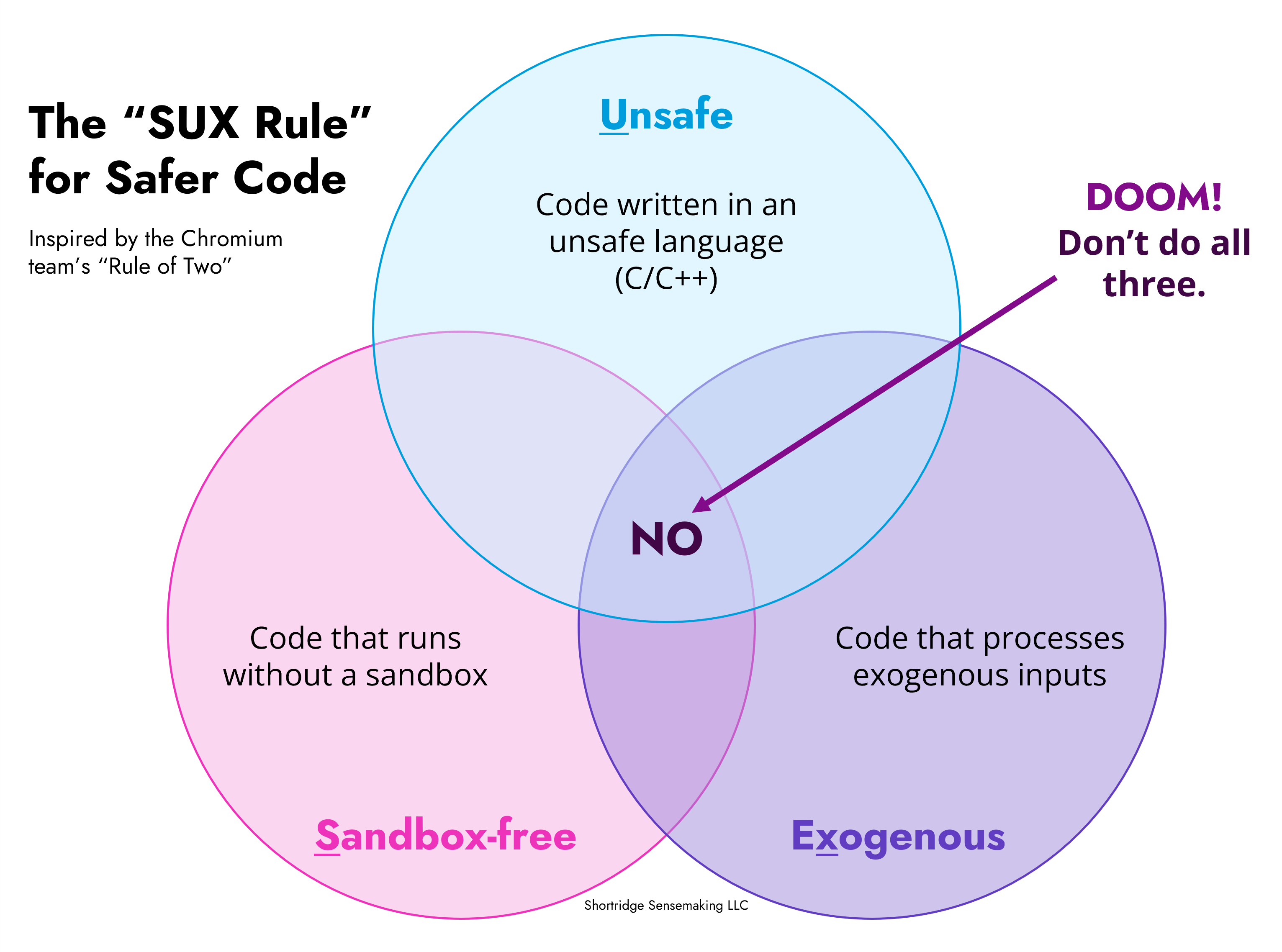

Instead, I propose the SUX Rule: Sandbox-free – Unsafe – eXogenous. If our code runs without a Sandbox and is written in an Unsafe language and processes eXogenous data, then that obviously sucks (i.e. SUX). We don’t want our code to suck.[4] Thus, we want to pick no more than two of these sucky things when we write code.
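To make “pick no more than two” concrete, here is a minimal sketch of the rule as a predicate. The `Component` class, its field names, and the example components are all hypothetical, made up for illustration; nothing here comes from Chromium’s guidance or any real tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """Hypothetical description of one software component."""
    name: str
    sandbox_free: bool     # S: runs without a sandbox
    unsafe_language: bool  # U: written in a memory unsafe language (e.g. C/C++)
    exogenous_data: bool   # X: processes data from outside the component


def sux_count(c: Component) -> int:
    """Number of sucky characteristics the component has (0 to 3)."""
    return sum([c.sandbox_free, c.unsafe_language, c.exogenous_data])


def sux(c: Component) -> bool:
    """True only when all three characteristics are present."""
    return sux_count(c) == 3


# Illustrative components, not real measurements of any project.
decoder = Component("image decoder", True, True, True)
sandboxed_decoder = Component("image decoder (sandboxed)", False, True, True)

print(sux(decoder))            # True
print(sux(sandboxed_decoder))  # False
```

The second component still has two of the three characteristics, which is exactly what the rule allows.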

If we don’t process exogenous data like user input,[5] then maybe it’s fine to write it in C or not use a sandbox. But if we do process exogenous data, then we either want a runtime sandbox (“privilege reduction” in Chromium’s lingo) or to write it in a memory safe language (Rust being the most direct replacement for C).[6]

I like exogenous since it feels less squishy than “untrustworthy inputs.” With “running without a sandbox” and “memory unsafe language,” there is a clear way to know whether you are doing things right (you apply a sandbox or you don’t use C/C++). How do you know whether your inputs are actually trustworthy? If you say, “I trust the input now,” why?

If we flip this to the attacker’s perspective, they start with data they control and then will encounter the gifts of either C/C++ or sandbox-free execution. They would greatly prefer to not have any “real” checks performed on their data leading up to that point. But what are “real” checks? I feel like “exogenous” clarifies this a little, since it makes it less about whether we trust the data and more about how to handle it.

Exogenous turns it into a question of: “Do we have things coming from the network / outside the component?” If yes, either sandbox or memory safety (or, even better, both).
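That question is simple enough to write down as a tiny decision helper. This is only a sketch: the function name, its parameters, and the advice strings are invented for illustration and are not part of the Chromium guidance.

```python
def advice(exogenous: bool, sandboxed: bool, memory_safe: bool) -> str:
    """Answer the 'exogenous' question for one component (hypothetical helper)."""
    if not exogenous:
        # Nothing arrives from outside the component, so the rule is satisfied.
        return "ok: no exogenous data"
    if sandboxed and memory_safe:
        return "ok: sandboxed and memory safe (even better)"
    if sandboxed or memory_safe:
        return "ok: one mitigation in place"
    # Exogenous data, no sandbox, memory unsafe language: all three at once.
    return "SUX: add a sandbox or move to a memory safe language"


print(advice(exogenous=True, sandboxed=False, memory_safe=False))
print(advice(exogenous=True, sandboxed=True, memory_safe=False))
```

Note that the helper never asks whether the data is “trusted”; it only asks where the data comes from and what surrounds the code that handles it.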

Realistically, the X – exogenous data – is the characteristic you can’t eliminate. A lot of code interacts with the outside world, whether human users or silicon services, and that is part of its intended functionality. That is kind of the whole point of many software components.

For many projects (most?) it will be easier to throw that code in a sandbox or write / refactor it into a memory safe language rather than acquiring cryptographic proof that the data comes from a trusted entity or transforming the data in specific ways (which the Chromium post details). The SUX Rule can prompt us to recall this thinky thinky during the design phase.

Conclusion

The SUX Rule is literally just me rebranding Chromium’s wonderful Rule of Two and changing terminology a bit to clarify the concept, avoid stepping on any Sith toes, and make it more memorable (since I’ve found not many software engineers even know of it, to my dismay).

My hope is that even if you don’t entirely remember what each letter stands for, perhaps you will remember “we don’t want code that SUX” and then you will look it up to refresh your memory. With any luck, a few years from now we’ll find that less of the world’s code SUX.

Enjoy this post? You might like my book, Security Chaos Engineering: Sustaining Resilience in Software and Systems, available at Amazon, Bookshop, and other major retailers online.

[1] It does not get much better than the Thirty-Six Lessons of Vivec, Sermon Seventeen.

[2] An example is the oft-memed Tragedy of Darth Plagueis the Wise.

[3] “Somehow, Palpatine has returned,” is dialogue that haunts me daily. There are few things in life that have disappointed me as much as hearing those words in the theater.

[4] If the internet is full of code that SUX, does that make it a SUXnet? (☞ ͡° ͜ʖ ͡°)☞

[5] The Chromium team frames an untrustworthy source as “any arbitrary peer on the Internet” which I feel the word “exogenous” captures.

[6] Totally not me mentioning Rust for SEO.