The Security Obstructionism (SecObs) Market

Obstruction is “a thing that impedes or prevents passage or progress; an obstacle or blockage.” Under this definition, I would include sabotage – deliberate obstruction or damage – as well as passivity, or what I like to term “aggressive passivity” – deliberate non-interference to actuate an undesirable outcome (the “sink or swim” approach).

In security, obstructionism foments the dreaded Department of No, the begrudged gatekeeper, and the truculent Security Theatre1. Hence, I am introducing the term Security Obstructionism (SecObs)2, a category of tools, policies, and practices whose outcome is to impede or prevent progress for security’s (speculative) sake. I suspect the TAM (total addressable market) for SecObs is enormous and perhaps provides a more coherent understanding of security stacks than traditional market categories.

But outputs aren’t outcomes3. The amount of activity performed by a team is not equivalent to productivity nor its ability to produce desirable outcomes4. The secret to the SecObs market is that this does not matter. The point of SecObs is not better security outcomes for the business or end users. The point is more security outputs as a proxy for progress and these outputs impart more control over the organization, transmogrifying into power and status. In essence, the point is a self-perpetuating organism5.

How does the infosec organism self-perpetuate via SecObs? Here are some examples (which perhaps can be called “Indicators of Obstructionism” or IoOs6):

- Vulnerability management that creates long lists of things to triage (and outside of dev workflows)

- “Shadow” anything – shadow IT, shadow SaaS, shadow APIs…

- Manual security reviews, manual change approval – basically anything to block shipping new code unless security personally gives the go ahead

- Internal education programs that are not based on measurable security outcomes (the primary outcome is wasted time)

- “Gotcha” phishing simulations that primarily measure the efficacy of phishing copy rather than the efficacy of security strategy

- Password rotation, key rotation, access approval, and other policies that may leave internal users unable to work

- Corporate VPNs, whose most effective use in 2022 is perhaps as the entry point for Ransomware-as-a-Service operations

- Shutting down modernization initiatives (cloud, microservices, etc.), slowing adoption of new tech or tools7

- “Insider threat” detection that bears a remarkable resemblance to malware (such as using keyloggers8 and screen recording9…)

- Petulantly allowing “self-serve” security… but without providing recommended patterns or guidance

- As soon as a security property or feature is embraced by the rest of the organization, it no longer “counts” as security – like how I’ve heard SSO recently labeled somewhat pejoratively as “convenience” vs. security by some infosec professionals…

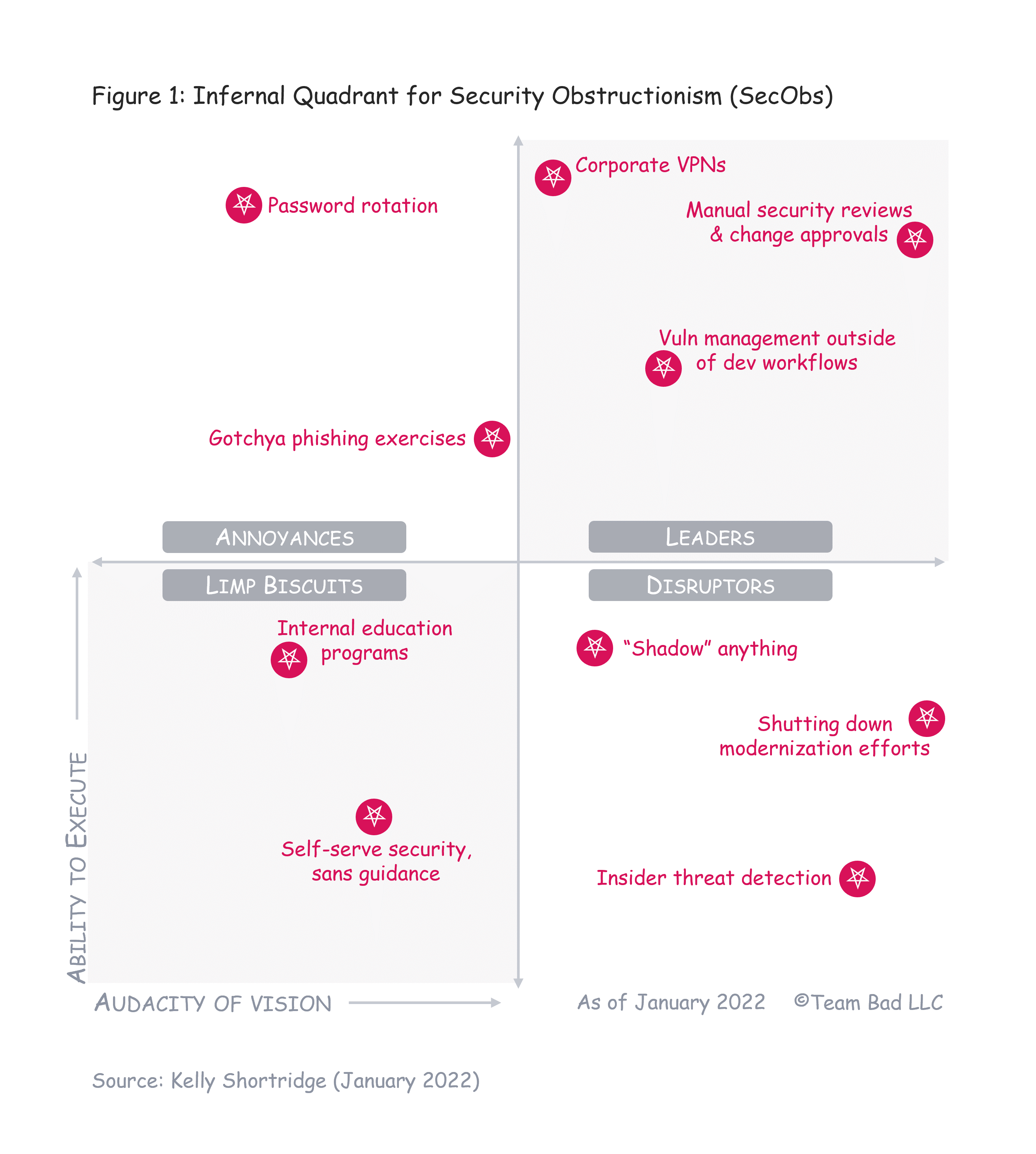

As seems necessary to legitimize a market category, I plotted SecObs on a market “graph”10:

Astute readers might have noted that some of these SecObs practices fall under the purview of “DevSecOps,” which is basically SecObs disguised in a hoodie, Allbirds, and Fjällräven backpack (carrying a MacBook). The typical definition of DevSecOps is that security is integrated across the software development lifecycle11, which is an unobjectionable goal. The problem is that this goal gets twisted: a thoughtful entwining of things that could promote systems resilience from design through to delivery becomes security reasserting itself as an authority.

But even the typical definition of DevSecOps does not justify its existence as a term; we would also need DevTestOps, DevQAOps, DevComplianceOps, DevAccountingOps, DevGeneralCounselOps to ensure that their concerns are also considered or built-in throughout the SDLC (as they should be!). Ergo, DevSecOps is quite literally Security Obstructionism in the linguistic sense but lamentably in the living sense, too – inserting security into the equation to impede Dev from meeting Ops.

The tragedy of SecObs echoes across hype cycles: bad security ideology mixed with bad incentives leads to bad implementation which leads to bad outcomes in all dimensions except the justification of status quo security people’s existence. Implementation is manipulable and can pervert even noble goals into SecObs. For instance, “shift left” is the purported mantra of DevSecOps12, but what it means too often in practice is shifting obstructionism earlier into the development process.

There are arguably some psychological benefits of ensuring expectations of timely software releases are destroyed sooner rather than later13, but the more tangible outcomes are that the obsession with preventing failure happens in more places. As a result, there are more outputs and more opportunities for finger pointing and citing “you did not follow process at X, Y juncture” when something goes wrong. Because SecObs is a defense mechanism, perhaps the most effective one in status quo security’s arsenal.

Obstruction is ultimately about preservation. Nobody obstructs something from happening if they want things to change. If security wanted things to go the DevOps way, they would understand that security, like resilience or reliability or maintainability14, is part of the outcomes that DevOps practices aim to achieve – and that there is immense value in ensuring all those qualities can be nurtured as a check against myopic organizational pressures15.

The problem is, if security is already one of the qualities that orgs doing the DevOps are trying to achieve, then that means the status quo will change. Software engineers, architects, and SREs will identify where existing security programs are falling short of desired outcomes and pioneer new programs to solve these challenges using their expertise as builders.

They will develop strategies to perform asset inventory, testing, and patching their own way – one that likely treats bugs as bugs, regardless of whether there are performance or security implications16. That means the security team is no longer performing these actions, which is one less thing to point to when proving the security strategy is “successful.” Never mind that security could absolutely still be essential by providing recommended patterns, serving as an expert sounding board, operating like a platform engineering team to build foundational systems to make security work easier, or leading security chaos engineering experiments17. Security professionals will be expected to design systems – a daunting shift that can catalyze existential panic about whether existing skill sets actually matter.
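To make “bugs as bugs” a little more tangible, here is a minimal sketch of a triage queue that ranks work by estimated impact and ignores whether a bug carries a “security” label. Everything in it is hypothetical: the `Bug` fields, the `impact` formula, and the numbers are my assumptions for illustration, not a recommended scoring model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bug:
    title: str
    users_affected: int    # hypothetical estimate
    minutes_degraded: int  # hypothetical estimate
    label: str             # "security", "performance", ... (never used for ranking)


def impact(bug: Bug) -> int:
    """Hypothetical impact score: affected users times minutes of degraded service."""
    return bug.users_affected * bug.minutes_degraded


def triage(bugs: list[Bug]) -> list[Bug]:
    """Order bugs by estimated impact, highest first, regardless of label."""
    return sorted(bugs, key=impact, reverse=True)


backlog = [
    Bug("reflected XSS on an internal admin page", 10, 5, "security"),
    Bug("checkout query times out under load", 100, 2, "performance"),
]
for bug in triage(backlog):
    print(impact(bug), bug.label, bug.title)
```

The only design choice that matters here is that `label` never reaches the sort key; a security bug and a performance bug with the same estimated impact land in the same place in the queue.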

I suspect the lizard brain18 origin of SecObs lurks within in-groups and out-groups19. The information security establishment has seen itself as a marginalized outsider, a scorned prophet, an unfairly resented authority figure who is just trying to keep the devs from sticking forks into electrical sockets. Humans like seeing members of their in-group succeed, but they love seeing members of the out-group fail (or at least suffer)20. Humans will sacrifice the greater good – or even more for themselves in a bigger but equally-divided pie – if it means the out-group gets less than they do21.

Adopting SecObs is how infosec can ensure the out-group(s) receive less. I have long been perplexed why some security professionals are quite so resistant to my thinky thinky – how could they so detest and resent something that extirpates toil for them so that they may stretch out their strategic wings to soar on the zephyrs of innovation? I’ve felt like they must see me as K-2SO saying “Congratulations! You are being rescued! Please do not resist.”

What I realized is that it does not matter if Security Chaos Engineering22 or all the other things23 I’ve proposed make these infosec traditionalists better off; what matters is that they feel that they are worse off on a relative basis24. SecObs makes everyone else in the organization miserable and puts them at least partially under infosec’s thumb. Therefore, even though it is a woefully inefficient use of the security team’s time, SecObs makes infosec better off on a relative basis – if not equals with engineering, at least able to directly impact their outcomes; if not fulfilled by their work, at least they aren’t the ones facing a Kafkaesque imposition on their workflows.

SecObs depends on the definition of “secure” remaining nebulous and unquantifiable. The argument for DevSecOps is that “while DevOps applications have stormed ahead in terms of speed, scale and functionality, they are often lacking in robust security and compliance.”25 But what does “robust security” even mean? The justification for Sec being treated as an equal among Dev and Ops is based on wielding the abstract ideal of “security” as a Maginot Line. If you ask what a “secure” app means, you will rarely receive an actionable or consistent answer. Is it free of bugs? Does it never experience failure? Or is it something that only security teams and security teams alone can identify, akin to “I know it when I see it”26?

Conceiving concrete security outcomes is not an arcane art, despite what the industry inculcates. An engineering team’s product is broken if they aren’t meeting relevant compliance standards. If they are addressing the retail industry, they can’t have a product if they aren’t PCI compliant; their customers quite literally cannot purchase it. The same is true for financial services or healthcare.

And in my recent experience27, SREs think about impacts to availability more than security people, despite availability being the A in the classic C.I.A. triad (which just turned 44 years old a few months ago)28. A cryptominer can impact stability and reliability in production – which jeopardizes the organization’s ability to conduct its business. An attacker exploiting a web vuln and crashing the machine causes downtime. Exfil of customer data can cause latency and result in compliance sanctions.

SecObs spends all its time fretting about preventing incidents and implementing tools and policies that impede business operations29 under the guise of collapsing the probabilistic wave function of failure to zero while spending very little time actually preparing for the inevitable incident.

But if an attacker cuts down an application in a hosted container forest and it automatically disappears and grows again, who cares? The impact is negligible, just as it should be if security is done well – if security is focused on outcomes rather than outputs. All that prevention just doesn’t matter if you can’t recover quickly when something bad happens, which it will. And what was the point of holding up a release by a week because the security team wanted to personally inspect it first for bugs when the impact of exploiting those bugs is “autoprovision another container to replace the compromised one”?
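The “disappears and grows again” behavior can be sketched as a tiny reconciliation loop. This is a toy model under stated assumptions, not how any particular orchestrator implements it: `Container`, `reconcile`, the `compromised` flag, and the image name are all hypothetical, and detecting the compromise is assumed to happen elsewhere.

```python
from dataclasses import dataclass, field
from itertools import count

_ids = count(1)  # hypothetical ID source for freshly provisioned containers


@dataclass
class Container:
    image: str
    compromised: bool = False
    id: int = field(default_factory=lambda: next(_ids))


def reconcile(fleet: list[Container], image: str, desired: int) -> tuple[list[Container], int]:
    """Discard compromised containers, then provision fresh ones up to the desired count."""
    healthy = [c for c in fleet if not c.compromised]
    replaced = len(fleet) - len(healthy)
    while len(healthy) < desired:
        healthy.append(Container(image=image))
    return healthy, replaced


fleet = [Container("shop:1.4") for _ in range(3)]
fleet[1].compromised = True  # an attacker cuts down one tree in the forest
fleet, replaced = reconcile(fleet, "shop:1.4", desired=3)
print(f"replaced {replaced} container(s); fleet size is {len(fleet)}")
```

The interesting outcome to measure is how long the fleet spends below `desired`, which is a recovery metric rather than a count of bugs found before release.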

What is to be done?30 When you see or hear weasel words like “appropriate” or “sufficient” or “robust” to describe a “level” or “maturity model”31 of security, it is worth pausing to ponder whether SecObs abounds. If the security program evokes the on-prem monolith and waterfalls era – SAST, DAST, vulnerability management, security reviews and approvals – but is festooned with the words “continuous” or “automated”, then perhaps what is now automated and continuous is SecObs. When you hear “we need to bake security into [thing]”, it could be a boon – weaving security into workflows to make it consistent, repeatable, and scalable – but it could also be a leading indicator of SecObs; engineering teams will be left to sink or swim because when they fail, it’s an opportunity for SecObs to say, “See why you need us?”

SecObs is pernicious; it is deeply rooted in the information security swamp and will be difficult to excise from the industry. DevSecOps may be its modern incarnation, but SecObs is a larger problem that existed before this trend and will continue to exist after it wanes. SecObs carries a massive TAM – at least $10 billion and likely much higher32 – which means there are legions of incumbents incentivized to actively fight against its removal.
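For anyone who wants to check the napkin math behind that floor, here is the arithmetic using only the figures from the market sizing footnote. The category values are rounded from that footnote (“nearly $14 billion” becomes 14, “just under $9 billion” becomes 9), and the ~6% share is my guess rather than a measured quantity.

```python
# All figures in billions of USD, rounded from the market sizing footnote.
MARKET_LOW, MARKET_HIGH = 165, 185  # total infosec market as of 2020
SECOBS_SHARE_PERCENT = 6            # assumed share, a guess rather than a measurement


def secobs_floor(market_size_bn: float, share_percent: float = SECOBS_SHARE_PERCENT) -> float:
    """Estimated SecObs spending for a given total market size."""
    return market_size_bn * share_percent / 100


# Rounded sizes of categories named in the footnote.
categories_bn = {
    "vulnerability management": 14,
    "VPN": 14,
    "CASB": 9,
    "security awareness training": 1,
}

print(f"floor: {secobs_floor(MARKET_LOW):.1f} to {secobs_floor(MARKET_HIGH):.1f}")
print(f"named categories combined: {sum(categories_bn.values())}")
```

The named categories sum to well above the ~6% floor, but not every dollar in them funds obstructionism, which is why I treat the floor as the conservative number.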

The strategies I have seen work are to burn down the status quo (quite literally firing obstructionists and building anew) and/or to support motivated software engineers and architects building overarching security programs in parallel that treat patches like other upgrades and attacks like other incidents. Security is treated as a facet of resilience, as it should be because it reflects reality33.

What I have never seen work is attempting to modernize SecObs, which is what we are seeing with too much of the DevSecOps movement. Getting status quo security pros up to speed on the latest technology just means they’ll now be able to weaponize it and talk about the dangers of shadow APIs and shadow Infrastructure as Code and shadow functions.

Because getting up to speed in the SecObs market isn’t about understanding the technology – how it works, its strengths, its potential, its deficiencies, its concerns – it’s about understanding the power dynamics of it and figuring out where security can best assert itself to maintain control in the organization. Find a vulnerability, exploit it, persist in the system… maybe the only difference between the “good actors” and the “bad actors” is that the bad ones make money for their organizations.

Thanks to Camille Fournier, Ryan Petrich, Greg Poirier, Andrew Ruef, James Turnbull, and Leif Walsh for feedback on this post.

Enjoy this post? Stay tuned for the full Security Chaos Engineering book later in 2022. In the meantime, you can read the Security Chaos Engineering report in the O’Reilly Learning Library.

-

For a theatrical discussion of Security Theatre, I recommend my keynote Exit Stage Left: Eradicating Security Theatre: https://www.youtube.com/watch?v=kiunphALNKw ↩︎

-

I will use SecObs throughout this post since pithy buzzwords seem to be infosec’s perpetual zeitgeist. ↩︎

-

While I recommend reading her book in full, this lucid interview with Dr. Forsgren cites examples of outcomes vs. outputs in the context of software engineering: https://www.techrepublic.com/article/how-to-measure-outcomes-of-your-companys-devops-initiatives/ Book citation: Forsgren, PhD, N., Humble, J., & Kim, G. (2018). Accelerate: The science of lean software and devops: Building and scaling high performing technology organizations. IT Revolution. ↩︎

-

Forsgren, N., Storey, M. A., Maddila, C., Zimmermann, T., Houck, B., & Butler, J. (2021). The SPACE of Developer Productivity: There’s more to it than you think. Queue, 19(1), 20-48. https://queue.acm.org/detail.cfm?id=3454124 ↩︎

-

This could perhaps be called the Tumor Model of Information Security. ↩︎

-

Perhaps later in 2022 we will see a YARA scanner for IoOs raise a $100 million Series A with a $1 billion post-money valuation on $1 million ARR. ↩︎

-

New and not-quite-compliant on everything is much better than old and unmaintained but compliant. The inability to update a system should terrify security; alas, in many cases, it is an afterthought or worse, seen as a comfort. ↩︎

-

Usually vendors won’t say “keylogging” explicitly but will use euphemisms like “keystroke dynamics”, “keystroke logging”, or “user behavior analytics”. As CISA explains in their Insider Threat Mitigation Guide about User Activity Monitoring (UAM), “In general, UAM software monitors the full range of a user’s cyber behavior. It can log keystrokes, capture screenshots, make video recordings of sessions, inspect network packets, monitor kernels, track web browsing and searching, record the uploading and downloading of files, and monitor system logs for a comprehensive picture of activity across the network.” See also the presentation Exploring keystroke dynamics for insider threat detection. ↩︎

-

CISA’s description of UAM tools (see citation 8) also notes the ability to “make video recordings of sessions” as a general capability of insider threat tools. As an example of a vendor that isn’t quite as shy about it: https://www.proofpoint.com/us/blog/insider-threat-management/what-advanced-corporate-keylogging-definition-benefits-and-uses ↩︎

-

Unlike other entities purporting to analyze markets, the Infernal Quadrant is actually a quadrant because it is a plane divided into four infinite regions. It is hard to imagine something less magical than constraining infinite regions by bounding them in a larger square, although this perhaps exposes the underlying principal problem: fitting everything into neat boxes and calling them something they’re not. ↩︎

-

Each vendor defines DevSecOps in their own way, but this is the definition that stays constant across most of them. Some vendors additionally highlight automation, some focus more on shift left, some suggest security processes are handled by devs, and some talk about “enabling development of secure software at the speed of Agile and DevOps”. ↩︎

-

Although IBM refers to “Shift Left” as a mantra rather than the mantra, suggesting that the collective noun for DevSecOps is a mantra of DevSecOpses. ↩︎

-

Koyama, T., McHaffie, J. G., Laurienti, P. J., & Coghill, R. C. (2005). The subjective experience of pain: where expectations become reality. Proceedings of the National Academy of Sciences, 102(36), 12950-12955. https://www.pnas.org/content/pnas/102/36/12950.full.pdf ↩︎

-

Kleppmann, M. (2017). Designing data-intensive applications: The big ideas behind reliable, scalable, and maintainable systems. O’Reilly Media, Inc. ↩︎

-

Rasmussen, J. (1997). Risk management in a dynamic society: a modelling problem. Safety science, 27(2-3), 183-213. http://sunnyday.mit.edu/16.863/rasmussen-safetyscience.pdf ↩︎

-

This recalls Linus Torvalds’ remark in 2008: “I personally consider security bugs to be just ‘normal bugs.’ I don’t cover them up, but I also don’t have any reason what-so-ever to think it’s a good idea to track them and announce them as something special.” https://yarchive.net/comp/linux/security_bugs.html ↩︎

-

For more on security chaos engineering experiments, either read the SCE ebook or watch my talk “The Scientific Method: Security Chaos Experimentation & Attacker Math” https://www.youtube.com/watch?v=oJ3iSyhWb5U ↩︎

-

One of my finest achievements is being responsible for the term “lizard brain” making it into the Wall Street Journal. Mitchell, H. (2021, September 7). How Hackers Use Our Brains Against Us. The Wall Street Journal. https://www.wsj.com/articles/how-hackers-use-our-brains-against-us-11631044800 ↩︎

-

I grazed the surface of in-groups and out-groups in my blog post “On YOLOsec and FOMOsec” but this particular insight had not yet coalesced in my mind at the time. /blog/posts/on-yolosec-and-fomosec/ ↩︎

-

Molenberghs, P., & Louis, W. R. (2018). Insights from fMRI studies into ingroup bias. Frontiers in psychology, 9, 1868. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6174241/ ↩︎

-

The OG paper on this dynamic is: Tajfel, H. (1970). Experiments in intergroup discrimination. Scientific American, 223(5), 96-103. https://faculty.ucmerced.edu/jvevea/classes/Spark/readings/tajfel-1970-experiments-in-intergroup-discrimination.pdf ↩︎

-

Shortridge, K., & Rinehart, A. (2020). Security Chaos Engineering. O’Reilly Media, Inc. https://www.oreilly.com/library/view/security-chaos-engineering/9781492080350/ ↩︎

-

My favorite of these things perhaps being Darth Jar Jar: A Model for Infosec Innovation: /blog/posts/darth-jar-jar-model-infosec-innovation/ followed by Lamboozling Attackers: A New Generation of Deception https://queue.acm.org/detail.cfm?id=3494836 ↩︎

-

For a 101 about reference points in decision making (and about the OG behavioral economics theory, Prospect Theory) I recommend reading the Decision Lab’s guide on it: https://thedecisionlab.com/reference-guide/economics/reference-point/ ↩︎

-

Quoted from https://www.forcepoint.com/cyber-edu/devsecops ↩︎

-

I will spare y’all a diversion into epistemology and instead just cite this somewhat bizarre historical examination of the quote, which, incidentally, serves as another example of the potency of in-group vs. out-group framing: https://www.wsj.com/articles/BL-LB-4558 ↩︎

-

By recent experience I mean reception to my talks, writings, and the Security Chaos Engineering e-book. But the SRE book provides additional supporting evidence by repeatedly underlining the importance of availability to SRE success: https://sre.google/sre-book/service-level-objectives/ ↩︎

-

Ruthberg, Z. G., & McKenzie, R. G. (1977). Audit and Evaluation of Computer Security. https://nvlpubs.nist.gov/nistpubs/Legacy/SP/nbsspecialpublication500-19.pdf ↩︎

-

For instance, a recent infosec Twitter thread suggested people TAKE THEIR SHIT OFF THE INTERNET as a solution to problems like vulnerabilities in vCenter instances. Its popularity even led to the creation of a site dedicated to this mindblowing advice: https://www.getyourshitofftheinternet.com/ ↩︎

-

This reference to the title of Lenin’s pamphlet is a chance for me to shoehorn in my quip that status quo information security should adore Marx’s labor theory of value (originally David Ricardo’s) in which goods or services are valued based on the effort that went into producing them rather than based on the consumer’s preferences (I will avoid going down the rabbit hole of decision theory at this juncture). ↩︎

-

I suggest reading Dr. Forsgren’s excellent takedown of maturity models for more on why they are “for chumps”: https://twitter.com/nicolefv/status/1130192402608664576 ↩︎

-

The entire information security market is somewhere between ~$165 billion and ~$185 billion as of 2020. It feels reasonable that at least ~6% of that spending is in security obstructionism. To wit, the vulnerability management market is nearly $14 billion as of 2021, the VPN market is also around $14 billion, and the CASB market (which addresses “shadow IT”) was valued at just under $9 billion in 2020. Smaller categories include the cybersecurity awareness training market at $1 billion in 2021, SAST at less than a billion, and the insider threat market, which is still small enough that Gartner hasn’t sized it yet, it seems. I strongly suspect that some percentage of each security market category includes tools that facilitate obstructionism, but that is difficult to quantify. ↩︎

-

Connelly, E. B., Allen, C. R., Hatfield, K., Palma-Oliveira, J. M., Woods, D. D., & Linkov, I. (2017). Features of resilience. Environment systems and decisions, 37(1), 46-50. https://www.osti.gov/pages/servlets/purl/1346540 ↩︎